Writing with AI: The Double-Edged Sword of Convenience

Chapter 1: The Analogy of Performance Enhancement

In this brief discussion, I invite you to consider how Large Language Models (LLMs) and LLM-powered tools such as GPT-4, Microsoft Copilot, Copy.ai, and Claude affect writing skills and cognitive functions. I will illustrate this by comparing the effect of anabolic steroids on athletic performance with the harm that excessive dependence on AI-generated content can do to a writer.

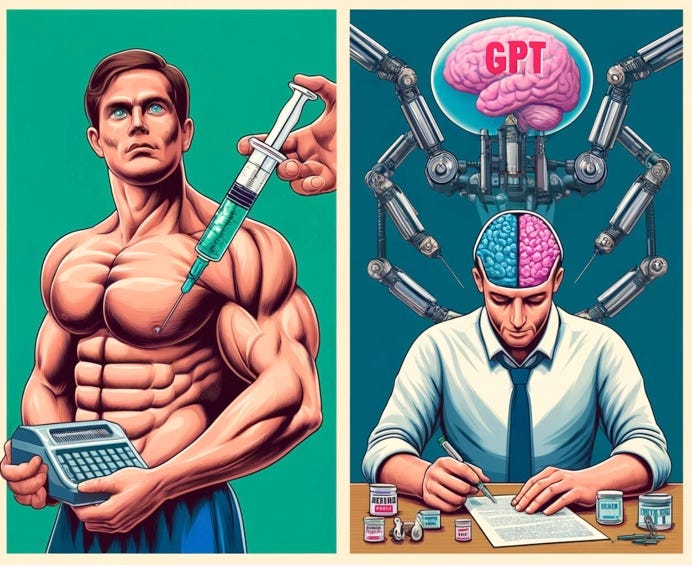

Consider the image above, where an LLM's mechanical hand ominously grips a syringe filled with "GPT steroids," injecting it into a writer's mind. This starkly mirrors athletes and bodybuilders who inject steroids, hoping to achieve impressive but ultimately misleading physical enhancements.

Writers and athletes alike may see superficial gains from over-reliance on GPTs and anabolic steroids, respectively, yet both face significant negative consequences. I argue that "GPT steroids" can impair a writer's cognitive abilities, diminish their creative agency, and stifle their imagination.

Section 1.1: The Impact of Anabolic Steroids

Let’s break down the elements of this analogy, beginning with the human body’s response to synthetic steroids. According to clevelandclinic.org, anabolic androgenic steroids are “synthetic substances that mimic the effects of male hormone testosterone.” They can be administered through various methods, including pills, injections, and skin patches.

When used under medical supervision, these steroids can effectively treat conditions such as chronic pain, low testosterone levels, certain types of breast cancer, and more. In these settings, they are a genuine medical advance that improves patients' health and quality of life.

However, the narrative shifts dramatically when steroids are misused for non-medical purposes, such as muscle building or enhancing athletic performance. Such misuse can lead to severe and potentially life-threatening complications, including heart attacks, strokes, liver tumors, kidney failure, and mental health issues like depression and addiction.

Users may temporarily believe they have reached peak physique or performance, but these gains are often illusory. What appears to be enhanced performance is merely a façade, masking a troubling lack of genuine ability.

Subsection 1.1.1: The Case for "GPT Steroids"

The analogy is admittedly imperfect: steroids are physical substances, while "GPT steroids" are digital tools. To use GPT steroids, one simply logs into an LLM, enters a prompt, and lets the model do the heavy lifting.

By employing these AI tools, you can produce substantial volumes of text quickly. If you encounter writer's block, GPTs can provide immediate relief. The output is often polished in terms of grammar, punctuation, and style.

While it’s beneficial to use LLMs for brainstorming, outlining, and refining language, problems arise when you allow them to dominate your writing process. When you cede control to these models, you risk becoming a passive participant, outsourcing your creativity and critical thinking to an external source.

As reliance on these models intensifies, several detrimental effects can occur:

- Your writing may become bland and uniform.

- Your creative and original thought processes may diminish.

- Your ability to think critically may decline, leaving you dependent on AI for solutions.

- You may lose the desire to cultivate your distinct voice as a writer.

The more you let AI steer your writing, the more disconnected you may become from the fundamental practices of reading, writing, and engaging with ideas.

Section 1.2: The Loss of Human Agency

One of the most alarming consequences of excessive reliance on AI is the potential erosion of individual agency. When an external entity begins to dictate your thoughts and actions, you gradually lose the ability to make your own choices and shape your own life.

As AI takes over your cognitive processes, it generates responses and meanings that you may not have fully internalized. The result is a detachment from the material and a lack of authenticity in your work. Claiming ownership of a text largely produced by AI is inherently misleading.

When you present AI-generated content as your own, you imply that it reflects your thoughts and emotions. This can create a façade of competence that, upon closer inspection, reveals a lack of genuine understanding and insight.