Efficiently Parsing Large JSON Files in .NET: A Comprehensive Guide


Chapter 1: Introduction to JSON Parsing

Practical guidance on parsing very large JSON files is surprisingly hard to find. Ask how to handle a 90GB JSON file and you will often be told that parsing it is impossible or impractical. Answers on platforms like StackOverflow and Quora often include statements such as “You must load the entire file into memory” or “It’s not feasible due to JSON’s structure.”

However, these claims are misguided. While I generally refrain from writing tutorials, the intriguing nature of this topic compels me to make an exception.

The client project that motivated this article was developed in Java, but I will present the solution in .NET, my preferred programming environment. The approach is largely the same on both platforms.


What You Will Learn

Depending on your familiarity with JSON, streams, and zip files, some sections of this article may be more relevant than others. The following topics will be covered:

- Parsing JSON files as streams in real-time.

- Parsing JSON data contained within a zip archive.

- Streaming and parsing a zip file received over the network.


Chapter 2: Real-World Challenges

Before delving into the technical details, let’s consider a real-world scenario. In a client project, we had to process zip files of 600MB to 2GB (up to 90GB when extracted) stored on an FTP server. Due to storage limitations, downloading and storing these files locally was not an option.

The solution involved streaming the zipped files and parsing the embedded JSON data as it was received. Surprisingly, this turned out to be much simpler than anticipated.

How We Tackled the Problem

When confronted with a new challenge, I prefer to develop small, easily modifiable prototypes through unit testing. Starting from basic concepts, I progressively work towards the complete solution. This prototyping approach yields rapid feedback and minimizes development costs, allowing for quick validation of my assumptions. Often, misalignments and rework stem from incorrect initial assumptions.

Parsing JSON Files as Streams

Once you have a zip stream and have located the entry containing the JSON file, the stream you get by opening that entry can be treated like any other stream of JSON data. This was a surprising realization for me, as I do not frequently work with zip files.

For those unfamiliar with streams, particularly JSON streams, let’s take a moment to understand the basic handling of streams containing JSON data.

Note that I will not cover the elementary task of deserializing a small JSON string into typed objects, as this is widely documented.

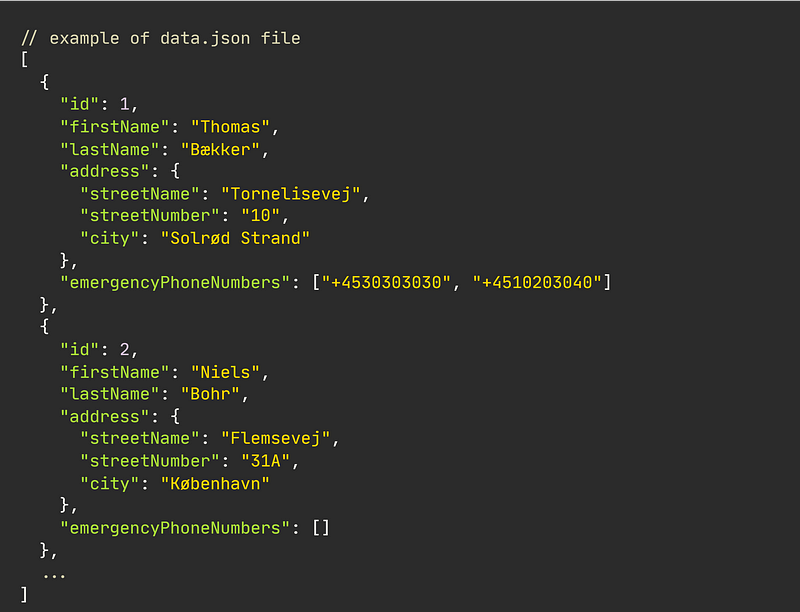

Consider the following JSON structure in a typical .json file.

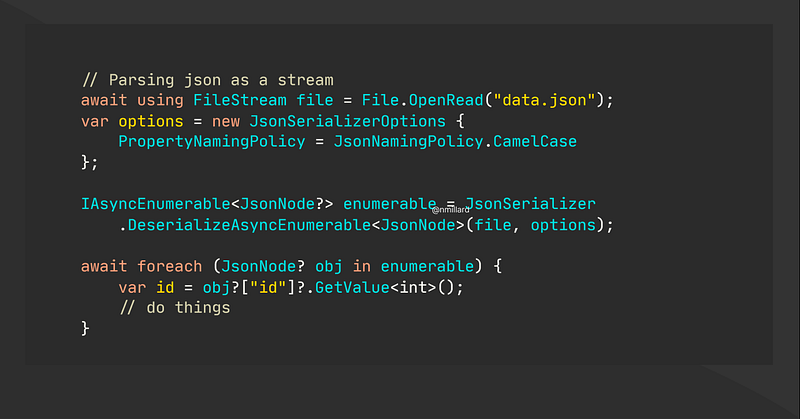

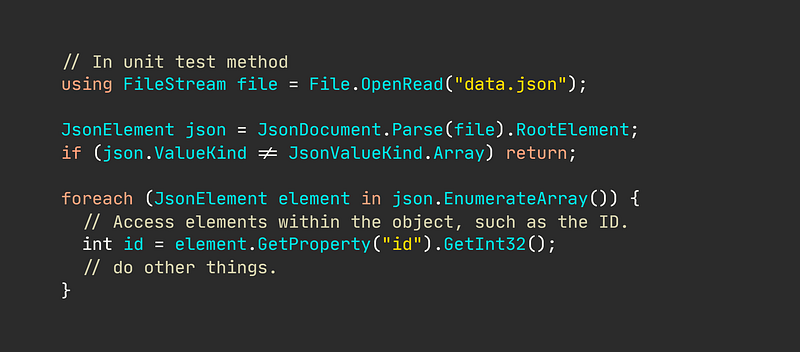

To read this JSON, open the file as a FileStream and pass it to JsonDocument.Parse(stream) or JsonNode.Parse(stream). This uses the modern System.Text.Json API; the Newtonsoft version follows shortly after.

When working with the parsed data, validate your assumptions before processing it, for example by confirming that the root element is actually an array. This approach works well for small JSON files but can crash the application on large ones, because JsonDocument.Parse(stream) reads the entire stream into memory before returning, which defeats the purpose of using a stream.

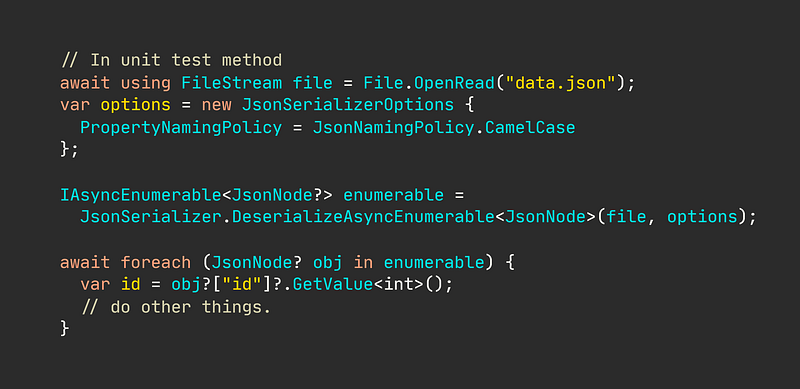
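For reference, here is a minimal sketch of this whole-document approach in C#. It assumes the file holds a root-level JSON array of objects; the in-memory demo data and the helper name CountObjects are hypothetical, and with a real file you would pass a FileStream instead. The root's ValueKind is checked before iterating, and because JsonDocument.Parse buffers the entire stream, memory use grows with the file size.

```csharp
using System;
using System.IO;
using System.Text;
using System.Text.Json;

// Demo on a small in-memory document; a real caller would pass a FileStream,
// for example File.OpenRead("data.json") (hypothetical file name).
using (var demoStream = new MemoryStream(Encoding.UTF8.GetBytes("[{\"id\":1},{\"id\":2}]")))
{
    Console.WriteLine($"Objects in array: {CountObjects(demoStream)}");
}

// Parses the whole stream into a JsonDocument, then counts the objects in the root array.
// JsonDocument.Parse reads the entire stream before returning, so memory use grows with file size.
static int CountObjects(Stream stream)
{
    using JsonDocument document = JsonDocument.Parse(stream);
    JsonElement root = document.RootElement;

    // Validate the assumption that the root element is an array before iterating it.
    if (root.ValueKind != JsonValueKind.Array)
        throw new InvalidDataException($"Expected a JSON array but found {root.ValueKind}.");

    int count = 0;
    foreach (JsonElement item in root.EnumerateArray())
    {
        if (item.ValueKind == JsonValueKind.Object)
            count++;
    }
    return count;
}
```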

To avoid crashes on datasets of unpredictable size, the implementation needs only a minor adjustment, as illustrated below.

The difference from the previous approach is the use of an async enumerable, which reads from the stream only as you loop through the JSON array.

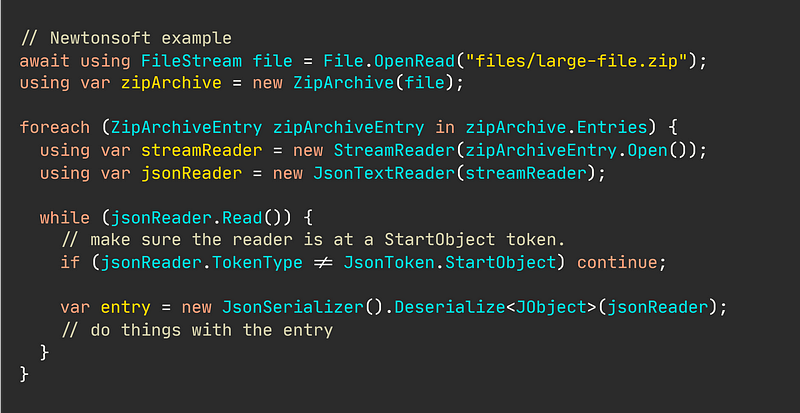
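A minimal sketch of the streaming variant with System.Text.Json, assuming a root-level JSON array whose objects may carry a hypothetical integer property named size. It uses JsonSerializer.DeserializeAsyncEnumerable&lt;JsonElement&gt;, which is one way to obtain an async enumerable over the array; it is not necessarily the exact code in the image.

```csharp
using System;
using System.IO;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

// Demo on a small in-memory document; in practice pass File.OpenRead(...) instead.
using (var demoStream = new MemoryStream(Encoding.UTF8.GetBytes("[{\"size\":10},{\"size\":32}]")))
{
    Console.WriteLine($"Total size: {await SumPropertyAsync(demoStream, "size")}");
}

// Sums an integer property across the objects of a root-level JSON array.
// DeserializeAsyncEnumerable reads the stream in chunks as the loop advances,
// so only the current element (plus a read buffer) is held in memory.
static async Task<long> SumPropertyAsync(Stream stream, string propertyName)
{
    long total = 0;
    await foreach (JsonElement item in JsonSerializer.DeserializeAsyncEnumerable<JsonElement>(stream))
    {
        if (item.ValueKind == JsonValueKind.Object &&
            item.TryGetProperty(propertyName, out JsonElement value) &&
            value.ValueKind == JsonValueKind.Number)
        {
            total += value.GetInt64();
        }
    }
    return total;
}
```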

Here is the same method using Newtonsoft.Json, probably the most widely used JSON library for .NET.

Keep in mind that you must advance the reader yourself by calling Read(), and check that the current token is JsonToken.StartObject before deserializing an item.

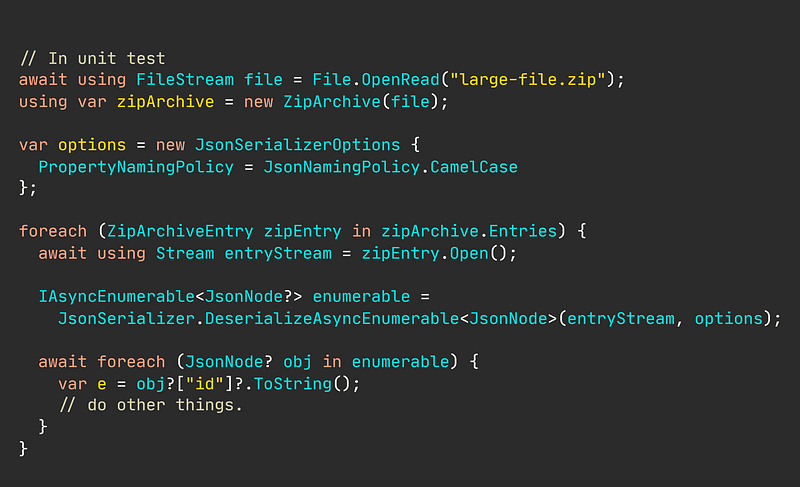
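A comparable sketch with Newtonsoft.Json, assuming the Newtonsoft.Json NuGet package is referenced and the file holds a root-level array of objects (the size property and the helper name SumProperty are hypothetical). The loop advances with Read() and loads a JObject only when the current token is JsonToken.StartObject.

```csharp
// Requires the Newtonsoft.Json NuGet package.
using System;
using System.IO;
using System.Text;
using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

using (var demoStream = new MemoryStream(Encoding.UTF8.GetBytes("[{\"size\":10},{\"size\":32}]")))
{
    Console.WriteLine($"Total size: {SumProperty(demoStream, "size")}");
}

// Walks the token stream and materializes one top-level array item at a time.
static long SumProperty(Stream stream, string propertyName)
{
    using var streamReader = new StreamReader(stream);
    using var reader = new JsonTextReader(streamReader);
    long total = 0;

    while (reader.Read())
    {
        // Only act on the start of an object; array brackets and other tokens are skipped.
        if (reader.TokenType != JsonToken.StartObject)
            continue;

        // JObject.Load consumes this object (including any nested content)
        // and leaves the reader on its EndObject token.
        JObject item = JObject.Load(reader);
        if (item.TryGetValue(propertyName, out JToken? value) && value.Type == JTokenType.Integer)
            total += (long)value;
    }
    return total;
}
```

Because JObject.Load consumes a whole object, objects nested inside an array item are not visited separately by the outer loop.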

Chapter 3: Parsing Zipped JSON Files

Understanding how to open a JSON file and parse its contents in real-time using an async stream sets the stage for tackling zipped JSON files. The approach remains largely unchanged; simply pass the file stream to a ZipArchive and iterate through its entries. If you know the zip archive contains only one file, you could use zipArchive.Entries.First() instead of looping through all entries.
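A minimal sketch of that idea, assuming each .json entry in the archive holds a root-level JSON array. The demo builds a small archive in memory so it runs without a file; with a real archive you would pass File.OpenRead(...) with your own path. The entry name data.json and the helper name CountJsonItemsInZipAsync are hypothetical.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

// Demo: build a small archive in memory with one JSON entry, then read it back.
var demoZip = new MemoryStream();
using (var writer = new ZipArchive(demoZip, ZipArchiveMode.Create, leaveOpen: true))
{
    using Stream entryStream = writer.CreateEntry("data.json").Open();
    entryStream.Write(Encoding.UTF8.GetBytes("[{\"id\":1},{\"id\":2}]"));
}
demoZip.Position = 0;
Console.WriteLine($"Items: {await CountJsonItemsInZipAsync(demoZip)}");

// Streams every .json entry in the archive and counts the elements of its root array.
static async Task<int> CountJsonItemsInZipAsync(Stream zipStream)
{
    using var archive = new ZipArchive(zipStream, ZipArchiveMode.Read);
    int count = 0;

    // With exactly one entry, archive.Entries.First() (System.Linq) would also work.
    foreach (ZipArchiveEntry entry in archive.Entries)
    {
        if (!entry.FullName.EndsWith(".json", StringComparison.OrdinalIgnoreCase))
            continue;

        // Open() returns a stream that decompresses on the fly as it is read.
        await using Stream jsonStream = entry.Open();
        await foreach (JsonElement item in JsonSerializer.DeserializeAsyncEnumerable<JsonElement>(jsonStream))
            count++;
    }
    return count;
}
```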

A major advantage of reading a zip file's content as a stream is that you can guard against zip bombs: archives whose highly compressed content expands enough to crash applications or make systems unresponsive. When the archive comes from an untrusted source, check the size of each zip entry before extracting it.

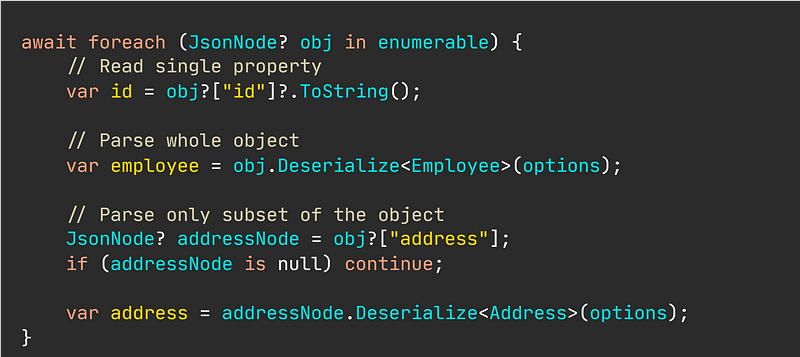
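A minimal sketch of such a check, based on ZipArchiveEntry.Length (the uncompressed size) and ZipArchiveEntry.CompressedLength. The helper name EnsureEntryLooksSafe and the limits used in the demo (100GB and a 200:1 ratio) are hypothetical; tune them to your own data. Both sizes come from the archive's own metadata, which the sender controls, so for untrusted input it is also sensible to cap the number of bytes you actually read.

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Demo: an entry of 1 MB of zeros compresses extremely well, so it fails the ratio check.
var demoZip = new MemoryStream();
using (var writer = new ZipArchive(demoZip, ZipArchiveMode.Create, leaveOpen: true))
{
    using Stream entryStream = writer.CreateEntry("zeros.json").Open();
    entryStream.Write(new byte[1024 * 1024]);
}
demoZip.Position = 0;
using (var archive = new ZipArchive(demoZip, ZipArchiveMode.Read))
{
    foreach (ZipArchiveEntry entry in archive.Entries)
    {
        try
        {
            EnsureEntryLooksSafe(entry, maxUncompressedBytes: 100L * 1024 * 1024 * 1024, maxCompressionRatio: 200);
            Console.WriteLine($"{entry.FullName}: OK");
        }
        catch (InvalidDataException ex)
        {
            Console.WriteLine($"{entry.FullName}: rejected ({ex.Message})");
        }
    }
}

// Rejects entries whose declared size or compression ratio exceeds the given limits.
// Call this before opening the entry's stream.
static void EnsureEntryLooksSafe(ZipArchiveEntry entry, long maxUncompressedBytes, double maxCompressionRatio)
{
    if (entry.Length > maxUncompressedBytes)
        throw new InvalidDataException(
            $"Entry '{entry.FullName}' declares {entry.Length} uncompressed bytes, above the limit of {maxUncompressedBytes}.");

    if (entry.CompressedLength > 0 &&
        (double)entry.Length / entry.CompressedLength > maxCompressionRatio)
        throw new InvalidDataException(
            $"Entry '{entry.FullName}' has a compression ratio above {maxCompressionRatio}:1.");
}
```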

Depending on your requirements, you may need to deserialize the JSON object differently. Typically, you might:

- Require the entire JSON object to be deserialized.

- Extract a single property from the JSON object.

- Only need a subsection of the JSON.

In the latter two cases, deserializing the entire object wastes memory and processing time. Below is an illustration of these different approaches.
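A rough sketch of the last two cases with System.Text.Json, assuming a root-level array whose items have hypothetical id and address properties (the helper names are hypothetical too). Each item is still parsed into a JsonElement; the saving comes from keeping only the value or subtree you need rather than binding every item to a full object model.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

const string DemoJson =
    "[{\"id\":1,\"name\":\"Ada\",\"address\":{\"city\":\"London\",\"zip\":\"N1\"}}," +
    " {\"id\":2,\"name\":\"Linus\",\"address\":{\"city\":\"Helsinki\",\"zip\":\"00100\"}}]";

using (var ids = new MemoryStream(Encoding.UTF8.GetBytes(DemoJson)))
    Console.WriteLine(string.Join(", ", await ReadSinglePropertyAsync(ids, "id")));

using (var addresses = new MemoryStream(Encoding.UTF8.GetBytes(DemoJson)))
    foreach (string json in await ReadSubsectionAsync(addresses, "address"))
        Console.WriteLine(json);

// Single property: keep one value per item and discard the rest of the item.
static async Task<List<string>> ReadSinglePropertyAsync(Stream stream, string propertyName)
{
    var values = new List<string>();
    await foreach (JsonElement item in JsonSerializer.DeserializeAsyncEnumerable<JsonElement>(stream))
    {
        if (item.ValueKind == JsonValueKind.Object && item.TryGetProperty(propertyName, out JsonElement value))
            values.Add(value.ToString());
    }
    return values;
}

// Subsection: keep only a nested object of each item, as raw JSON text.
static async Task<List<string>> ReadSubsectionAsync(Stream stream, string propertyName)
{
    var sections = new List<string>();
    await foreach (JsonElement item in JsonSerializer.DeserializeAsyncEnumerable<JsonElement>(stream))
    {
        if (item.ValueKind == JsonValueKind.Object &&
            item.TryGetProperty(propertyName, out JsonElement section) &&
            section.ValueKind == JsonValueKind.Object)
        {
            sections.Add(section.GetRawText());
        }
    }
    return sections;
}
```

For the first case, deserializing the whole object, you would simply keep each item or bind it to a full model type.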

Chapter 4: Streaming Zipped JSON Over a Network

Imagine downloading a zipped JSON file over a network while needing to parse the embedded JSON on the fly. This scenario, while uncommon, is quite feasible. The solution is surprisingly straightforward and closely resembles our previous examples.

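Notice how we make a standard GetStreamAsync() call and pass the resulting stream to the ZipArchive.

And that’s all there is to it, with one caveat: ZipArchive needs the archive's central directory, which is stored at the end of the file, so when it receives a non-seekable stream such as an HTTP response body in Read mode, it first copies the entire compressed archive into a MemoryStream. The embedded JSON is still decompressed and parsed incrementally, but the compressed download itself is held in memory, which matters for archives in the gigabyte range.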
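A minimal sketch of the network variant, assuming the archive's first entry contains a root-level JSON array. The helper names are hypothetical, and the URL is taken from the first command-line argument. The stream handling is split into its own function so it can be exercised without a network connection.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

// Pass the archive URL as the first command-line argument.
if (args.Length > 0)
{
    using var client = new HttpClient();
    Console.WriteLine($"Items: {await CountItemsInRemoteZipAsync(client, args[0])}");
}

// Opens the HTTP response body as a stream and hands it to the zip reader.
static async Task<int> CountItemsInRemoteZipAsync(HttpClient client, string url)
{
    await using Stream responseStream = await client.GetStreamAsync(url);
    return await CountItemsInZipAsync(responseStream);
}

// Streams the root-level JSON array of the archive's first entry.
static async Task<int> CountItemsInZipAsync(Stream zipStream)
{
    // For a non-seekable stream such as an HTTP response body, ZipArchive in Read mode
    // copies the whole archive into a MemoryStream before listing the entries.
    using var archive = new ZipArchive(zipStream, ZipArchiveMode.Read);
    ZipArchiveEntry entry = archive.Entries[0]; // assumption: the first entry holds the JSON
    await using Stream jsonStream = entry.Open();

    int count = 0;
    await foreach (JsonElement item in JsonSerializer.DeserializeAsyncEnumerable<JsonElement>(jsonStream))
        count++;
    return count;
}
```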

A Word of Caution

You may encounter compatibility issues. My approach works for zip files compressed with DEFLATE (RFC 1951), the most widely used compression method in zip archives and one natively supported by both Windows and macOS. DEFLATE is also part of the ISO/IEC 21320 standard for document container files. I cannot guarantee that this technique works with other compression methods.

In Summary

Parsing JSON as a stream is not as complex as it may seem, despite the discouraging answers found on StackOverflow. How you handle streamed content can significantly affect memory usage, particularly if you deserialize a large object when you only need a single property. Finally, protect your system against zip bombs by checking the size of each zip entry before extracting it.


Let’s Stay Connected!

Stay informed about similar articles by signing up for my newsletter and check out my YouTube channel (@Nicklas Millard). Connect on LinkedIn.

Resources for the Curious

Visit the GitHub repository maintained by the author, Nicklas Millard.
